Deep learning has become extremely popular in scientific computing, and its techniques are widely used by businesses that solve complex problems. All deep learning algorithms use different kinds of neural networks to carry out specific tasks.

This article looks at key artificial neural networks, which are loosely modeled on the human brain, and at how deep learning algorithms operate.

What is Deep Learning?

Deep learning uses artificial neural networks to carry out complex calculations on vast volumes of data. It is a type of machine learning modeled on the structure and function of the human brain.

Deep learning algorithms train machines by learning from examples. Deep learning is frequently used in sectors like healthcare, eCommerce, entertainment, and advertising.

Defining Neural Networks

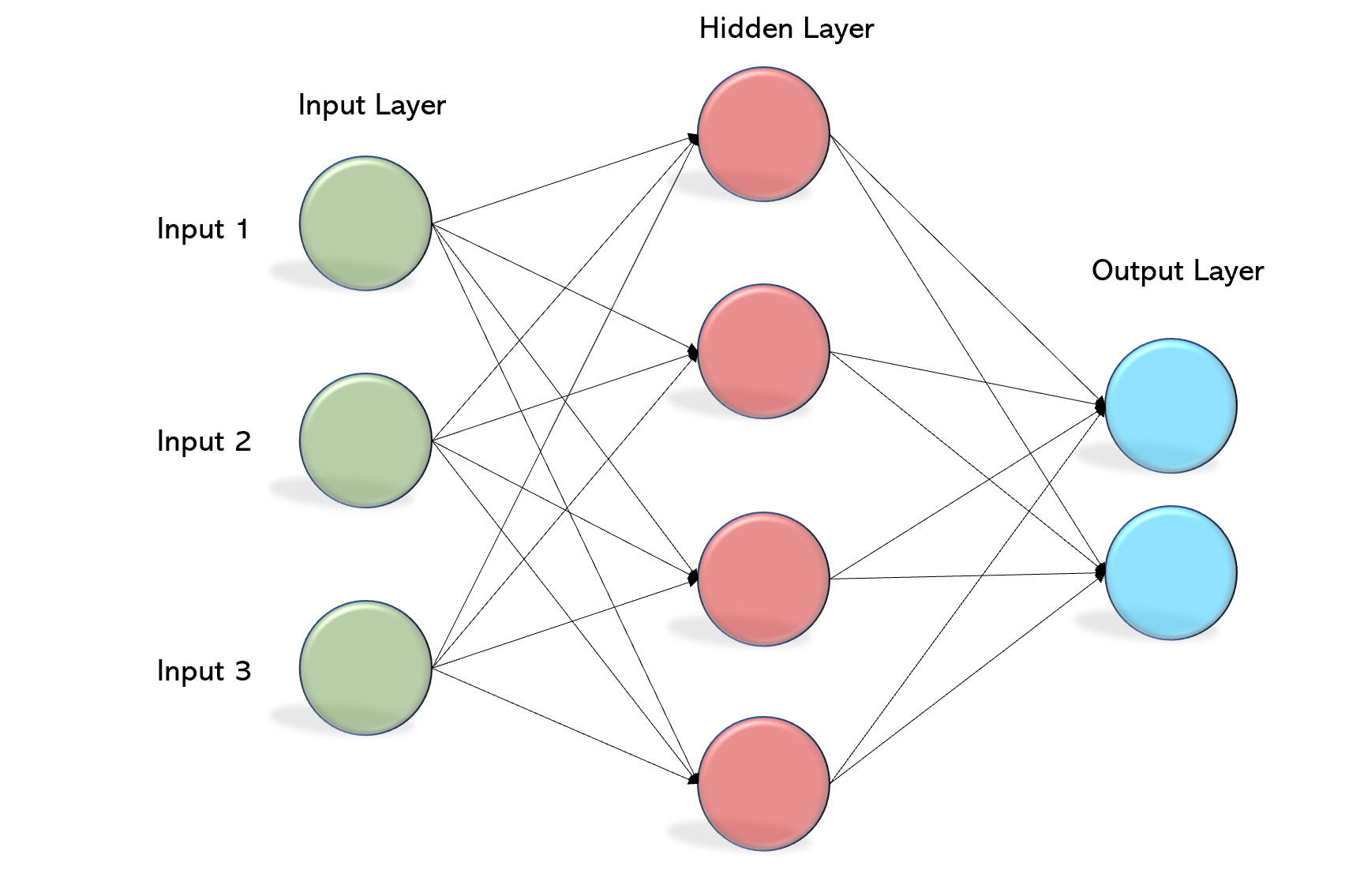

Artificial neurons, often referred to as nodes, make up a neural network, which is organized similarly to the human brain. These nodes are arranged in three kinds of layers, one after another:

- The input layer

- The hidden layer(s)

- The output layer

Each node receives information from the data in the form of inputs. The node multiplies the inputs by weights (initially random), sums the results, and adds a bias. A nonlinear function, also referred to as an activation function, is then applied to determine whether the neuron fires.
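As a minimal sketch of the computation a single node performs, the snippet below multiplies inputs by weights, adds a bias, and applies an activation function. The sigmoid activation and all numeric values are assumptions chosen for illustration; the article does not prescribe a particular activation.

```python
import math

def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron_output(inputs, weights, bias):
    """Weighted sum of the inputs plus a bias, passed through an activation."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return sigmoid(z)

# Hypothetical values chosen only for illustration.
inputs = [0.5, -1.0, 2.0]
weights = [0.4, 0.3, -0.2]
bias = 0.1

activation = neuron_output(inputs, weights, bias)
print(round(activation, 4))
```

An output close to 1 can be read as the neuron firing strongly, and an output close to 0 as the neuron staying mostly silent.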

How do Deep Learning Algorithms Work?

Self-learning representations are a hallmark of deep learning algorithms, but the algorithms also rely on ANNs that mirror how the brain processes information. During training, the algorithms use unknown elements in the input distribution to extract features, classify objects, and identify relevant data patterns. Much like training machines to learn for themselves, this takes place on several levels, with the algorithms used to build the models.

Deep learning models use several algorithms. No single network is considered perfect, but some algorithms are better suited to particular tasks. To choose the right one, it helps to have a solid understanding of all the major algorithms.

Types of Algorithms used in Deep Learning

1. Convolutional Neural Networks (CNNs)

CNNs, often referred to as ConvNets, have several layers and are mostly used for image processing and object detection. Yann LeCun developed an early CNN, known as LeNet, in the late 1980s. It was used to recognize characters such as ZIP codes and digits. CNNs are also used to identify satellite images, process medical images, forecast time series, and detect anomalies.
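The operation that gives CNNs their name can be sketched in a few lines. The example below is an illustration only: the image, the kernel values, and the function name `conv2d` are assumptions, and a real CNN learns its kernels during training rather than using hand-picked ones.

```python
def conv2d(image, kernel):
    """Valid (no padding) 2D cross-correlation, the operation CNN layers call convolution."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            total = 0
            # Slide the kernel over the image and sum the element-wise products.
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        output.append(row)
    return output

# Hypothetical 4x4 image with a vertical edge between columns 1 and 2.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Hand-picked kernel that responds to left-to-right increases in intensity.
kernel = [
    [-1, 1],
    [-1, 1],
]

feature_map = conv2d(image, kernel)
print(feature_map)
```

The resulting feature map is nonzero only where the kernel overlaps the edge, which is how a convolutional layer localizes a pattern in an image.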

2. Long Short-Term Memory Networks (LSTMs)

LSTMs are a type of recurrent neural network (RNN) that can learn and remember long-term dependencies. Recalling past information for extended periods is their default behavior. Because they retain information over time and can recall prior inputs, they are helpful in time-series prediction. LSTMs have a chain-like structure in which four interacting layers communicate in a special way.
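The four interacting layers can be sketched as the forget gate, input gate, candidate layer, and output gate of the standard LSTM formulation. This is a simplified illustration under stated assumptions: every quantity is a single number rather than a vector, and the parameter values are hypothetical and untrained.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def lstm_step(x, h_prev, c_prev, params):
    """One time step of a scalar LSTM cell (input, hidden state and cell state are single numbers)."""
    # Each of the four layers sees the current input and the previous hidden state.
    def layer(name, activation):
        w_x, w_h, b = params[name]
        return activation(w_x * x + w_h * h_prev + b)

    f = layer("forget", sigmoid)        # how much of the old cell state to keep
    i = layer("input", sigmoid)         # how much new information to write
    g = layer("candidate", math.tanh)   # the new information itself
    o = layer("output", sigmoid)        # how much of the cell state to expose

    c = f * c_prev + i * g              # updated long-term memory
    h = o * math.tanh(c)                # updated hidden state, also the step's output
    return h, c

# Hypothetical, untrained parameters: (input weight, recurrent weight, bias) per layer.
params = {
    "forget": (0.5, 0.1, 1.0),
    "input": (0.6, -0.2, 0.0),
    "candidate": (1.0, 0.3, 0.0),
    "output": (0.4, 0.2, 0.0),
}

h, c = 0.0, 0.0
for x in [1.0, 0.5, -0.3]:
    h, c = lstm_step(x, h, c, params)
print(h, c)
```

The cell state `c` is what lets the network carry information across many steps: when the forget gate stays close to 1 and the input gate close to 0, the stored value passes through almost unchanged.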

3. Recurrent Neural Networks (RNNs)

RNNs have connections that form directed cycles, which allow the output from the previous step to be fed back as an input to the current step. Thanks to this internal memory, the network can retain information about prior inputs. Natural language processing, time series analysis, handwriting recognition, and machine translation are all common applications for RNNs.
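The feedback loop can be illustrated with a minimal recurrent unit. The sketch below assumes a scalar hidden state, a tanh activation, and hand-picked weights; the names `rnn_step` and `run_rnn` are hypothetical.

```python
import math

def rnn_step(x, h_prev, w_x, w_h, b):
    """One step of a scalar recurrent unit: the previous hidden state feeds back in."""
    return math.tanh(w_x * x + w_h * h_prev + b)

def run_rnn(sequence, w_x=0.8, w_h=0.5, b=0.0):
    """Process a sequence one element at a time, carrying the hidden state forward."""
    h = 0.0
    states = []
    for x in sequence:
        h = rnn_step(x, h, w_x, w_h, b)
        states.append(h)
    return states

# The two sequences differ only in their first element.
states_a = run_rnn([1.0, 0.0, 0.0])
states_b = run_rnn([0.0, 0.0, 0.0])
print(states_a)
print(states_b)
```

Even though the last two inputs are identical, the final hidden states differ, because the first input is still echoed through the recurrent connection.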

4. Generative Adversarial Networks (GANs)

GANs are generative deep learning algorithms that produce new data instances resembling the training data. A GAN consists of two parts: a generator that learns to produce fake data and a discriminator that learns to tell that fake data apart from real samples. GANs have become increasingly widely used over time. They can be employed to enhance astronomical images and to simulate gravitational lensing for dark-matter research.
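The opposing goals of the two parts can be sketched through their loss functions. The binary cross-entropy losses below are the commonly used formulation, not something the article specifies, and the discriminator scores are hypothetical numbers rather than outputs of a trained model.

```python
import math

def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy: low when real data scores high and fake data scores low."""
    return -(math.log(d_real) + math.log(1.0 - d_fake))

def generator_loss(d_fake):
    """Non-saturating generator loss: low when the discriminator scores fakes as real."""
    return -math.log(d_fake)

# d_real and d_fake are hypothetical discriminator outputs in (0, 1):
# the estimated probability that a sample is real.
fooled = {"d_real": 0.6, "d_fake": 0.7}   # the discriminator is being fooled
sharp = {"d_real": 0.9, "d_fake": 0.1}    # the discriminator separates the two well

d_loss_fooled = discriminator_loss(**fooled)
d_loss_sharp = discriminator_loss(**sharp)
g_loss_fooled = generator_loss(fooled["d_fake"])
g_loss_sharp = generator_loss(sharp["d_fake"])
print(d_loss_fooled, d_loss_sharp, g_loss_fooled, g_loss_sharp)
```

The situation that lowers one network's loss raises the other's, which is the adversarial part of the name.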

5. Radial Basis Function Networks (RBFNs)

RBFNs are a special type of feedforward neural network that uses radial basis functions as activation functions. They have an input layer, a hidden layer, and an output layer, and are typically used for classification, regression, and time-series prediction.
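A radial basis function responds to how far an input lies from a center. The sketch below assumes the Gaussian form, which is a common choice but not the only one, and uses hand-picked centers and output weights instead of trained ones.

```python
import math

def gaussian_rbf(x, center, width):
    """Activation that depends only on the distance between x and a center."""
    sq_dist = sum((xi - ci) ** 2 for xi, ci in zip(x, center))
    return math.exp(-sq_dist / (2.0 * width ** 2))

def rbfn_predict(x, centers, width, out_weights, out_bias):
    """Hidden layer of RBF units followed by a linear output layer."""
    hidden = [gaussian_rbf(x, c, width) for c in centers]
    return sum(h * w for h, w in zip(hidden, out_weights)) + out_bias

# Hypothetical network: one hidden unit per class prototype.
centers = [[0.0, 0.0], [1.0, 1.0]]
out_weights = [1.0, -1.0]

score_near_first = rbfn_predict([0.1, 0.0], centers, 0.5, out_weights, 0.0)
score_near_second = rbfn_predict([0.9, 1.0], centers, 0.5, out_weights, 0.0)
print(score_near_first, score_near_second)
```

A positive score indicates the input sits near the first center and a negative score near the second, so the sign can serve as a two-class decision.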

6. Multilayer Perceptrons (MLPs)

MLPs are the best place to begin learning about deep learning technology. They are a kind of feedforward neural network that contains multiple layers of perceptrons with activation functions. An MLP consists of a single input layer and a single output layer that are fully connected, with one or more hidden layers in between. MLPs can be used to build speech recognition, image recognition, and machine translation software.
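A forward pass through a small MLP can be sketched as follows. The weights are hand-set so that the network computes XOR, a function a single perceptron cannot represent; in practice the weights would be learned. The ReLU activation and the helper names are assumptions for illustration.

```python
def relu(z):
    return max(0.0, z)

def dense(inputs, weights, biases, activation):
    """Fully connected layer: every output unit sees every input."""
    return [
        activation(sum(x * w for x, w in zip(inputs, unit_weights)) + b)
        for unit_weights, b in zip(weights, biases)
    ]

def mlp_forward(x):
    """2 inputs -> 2 hidden units (ReLU) -> 1 output (linear), with hand-set weights."""
    hidden = dense(x, [[1.0, 1.0], [1.0, 1.0]], [0.0, -1.0], relu)
    return dense(hidden, [[1.0, -2.0]], [0.0], lambda z: z)[0]

xor_outputs = [mlp_forward([a, b]) for a, b in [(0, 0), (0, 1), (1, 0), (1, 1)]]
print(xor_outputs)
```

The hidden layer is what makes this possible: removing it leaves a single linear unit, which cannot separate the XOR cases.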

7. Self-Organizing Maps (SOMs)

SOMs, created by Professor Teuvo Kohonen, enable data visualization by using self-organizing artificial neural networks to reduce the dimensionality of the data. Data visualization tries to address the problem that high-dimensional data is difficult for people to picture. SOMs were developed to help people understand this high-dimensional data.
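One training step of a SOM can be sketched as finding the best-matching map node for a sample and pulling that node and its neighbors toward the sample. This is a simplified illustration that assumes a one-dimensional map of three nodes, a Gaussian neighborhood, and a fixed learning rate; the function names and values are hypothetical.

```python
import math

def best_matching_unit(sample, grid):
    """Index of the map node whose weight vector is closest to the sample."""
    distances = [math.dist(sample, node) for node in grid]
    return distances.index(min(distances))

def som_update(sample, grid, learning_rate=0.5, radius=1.0):
    """Pull the winning node and its neighbors on a 1-D map toward the sample."""
    bmu = best_matching_unit(sample, grid)
    for idx, node in enumerate(grid):
        # Neighborhood strength falls off with distance along the map, not in data space.
        influence = math.exp(-((idx - bmu) ** 2) / (2.0 * radius ** 2))
        for d in range(len(node)):
            node[d] += learning_rate * influence * (sample[d] - node[d])
    return bmu

# Three map nodes, each holding a 3-dimensional weight vector.
grid = [[0.0, 0.0, 0.0], [0.5, 0.5, 0.5], [1.0, 1.0, 1.0]]
sample = [0.9, 0.8, 1.0]

before = math.dist(sample, grid[2])
winner = som_update(sample, grid)
after = math.dist(sample, grid[2])
print(winner, before, after)
```

Repeating this step over many samples arranges the nodes so that neighbors on the map represent similar inputs, which yields the low-dimensional view of the data.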

8. Deep Belief Networks (DBNs)

DBNs are generative models made up of multiple layers of stochastic, latent variables. The latent variables, often known as hidden units, have binary values. A DBN is a stack of Boltzmann machines with connections between the layers, so each RBM layer communicates with both the layer above it and the layer below it. DBNs are used for image recognition, video recognition, and motion-capture data.

9. Restricted Boltzmann Machines (RBMs)

RBMs, popularized by Geoffrey Hinton, are stochastic neural networks that can learn a probability distribution over a set of inputs. This deep learning technique is used for dimensionality reduction, regression, collaborative filtering, feature learning, and topic modeling. RBMs are the building blocks of DBNs.
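The stochastic behavior of an RBM can be sketched as a single pass from the visible units to the hidden units and back. The example assumes binary units with sigmoid activation probabilities, which is the usual formulation; the weights are hypothetical and untrained, and no learning rule is shown.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def hidden_probs(visible, weights, hidden_bias):
    """P(h_j = 1 | v) for each hidden unit; weights[i][j] links visible i to hidden j."""
    return [
        sigmoid(hidden_bias[j] + sum(visible[i] * weights[i][j] for i in range(len(visible))))
        for j in range(len(hidden_bias))
    ]

def visible_probs(hidden, weights, visible_bias):
    """P(v_i = 1 | h) for each visible unit, using the same weights in reverse."""
    return [
        sigmoid(visible_bias[i] + sum(hidden[j] * weights[i][j] for j in range(len(hidden))))
        for i in range(len(visible_bias))
    ]

def sample_binary(probs, rng):
    """Draw a 0/1 value for each unit from its activation probability."""
    return [1 if rng.random() < p else 0 for p in probs]

rng = random.Random(0)                              # seeded for repeatability
weights = [[2.0, -1.0], [2.0, -1.0], [-1.0, 2.0]]   # 3 visible units x 2 hidden units
v0 = [1, 1, 0]

p_h = hidden_probs(v0, weights, [0.0, 0.0])
h0 = sample_binary(p_h, rng)
p_v = visible_probs(h0, weights, [0.0, 0.0, 0.0])
print(p_h, h0, p_v)
```

There are no connections within a layer, so each hidden unit's probability depends only on the visible units, and vice versa. That restriction is what keeps sampling an entire layer at once this simple.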

10. Autoencoders

An autoencoder is a particular kind of feedforward neural network in which the input and the target output are identical. Geoffrey Hinton designed autoencoders in the 1980s to address unsupervised learning problems. These networks are trained to replicate the data from the input layer at the output layer. Image processing, popularity prediction, and drug discovery are just a few applications for autoencoders.
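The encode-then-reconstruct idea can be sketched with a tiny linear autoencoder that squeezes two input features into a single number and expands it back. The weights are hand-picked rather than trained, and the function names are hypothetical; the aim is only to show how reconstruction error measures what the bottleneck fails to preserve.

```python
def encode(x, enc_weights):
    """Compress the input into a single-number code (the bottleneck)."""
    return sum(xi * w for xi, w in zip(x, enc_weights))

def decode(code, dec_weights):
    """Expand the code back to the input's original size."""
    return [code * w for w in dec_weights]

def reconstruction_error(x, x_hat):
    """Mean squared error between the input and its reconstruction."""
    return sum((a - b) ** 2 for a, b in zip(x, x_hat)) / len(x)

# Hand-picked weights (not trained) suited to inputs whose two features are equal.
enc_weights = [0.5, 0.5]
dec_weights = [1.0, 1.0]

on_pattern = [3.0, 3.0]
off_pattern = [3.0, 1.0]

err_on = reconstruction_error(on_pattern, decode(encode(on_pattern, enc_weights), dec_weights))
err_off = reconstruction_error(off_pattern, decode(encode(off_pattern, enc_weights), dec_weights))
print(err_on, err_off)
```

Inputs that match the pattern the autoencoder captures are reproduced exactly, while inputs that deviate from it come back with a visible error. Training adjusts the weights to minimize this error over the dataset.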